Fact-Checking Claims By Cybernews: The 16 Billion Record Data Breach That Wasn't

From dumps with no login credentials at all, to direct connections to txtbase leaks from Telegram and old breaches, Cybernews tried to pass this off as new and unseen, but the data says otherwise.

Disclaimer: This post is quite long as we tried to include most of the information we gathered that we haven’t seen discussed online. You are free to use any of the information here as long as you credit this post and author.

Background

On June 18th, Cybernews published an article titled “The 16-billion-record data breach that no one’s ever heard of”.

The initial post didn’t provide much information on the servers they were using for the article; there were no IP addresses or index names, just counts. What the article did provide, however, was enough information to know that this wasn’t even “a data breach.” It was a collection of leaks and breaches that had nothing in common other than that they were added to a spreadsheet by the same researcher.

No wonder no one had ever heard of the 16-billion-record data breach. It never existed.

I knew I would be able to get information about at least some of the data because I monitor Elasticsearch as well. However, data on these servers gets wiped frequently by wiperware, and not every dataset is static, since records are sometimes still being imported, so searching by counts alone isn’t exactly foolproof.

When Cybernews updated the post to show the index names, making it easier to identify and match servers, I messaged my friend @Scary, who also researches exposed data. Together on a call, we spent several hours digging through our logs and managed to match multiple entries in our logs with data mentioned in the article.

Some of our findings are described in the remainder of this article.

Findings: Overview

Some of the names published by Cybernews may not have been the actual index names, but we managed to match and look at samples for multiple servers.

We only looked for obvious matches between our logs and Cybernews’ datasets, and we likely missed servers, but the matches we did find are sufficient to disprove several of Cybernews’ claims, or at least to warrant retractions or corrections.
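To illustrate the kind of matching described above, here is a minimal sketch, not the actual process we used. It assumes both the published list and a monitoring log can be reduced to (name, count) pairs. The `Entry` class, the `match_by_count` function, and the `example-*` rows are hypothetical; only the `socialprofiles` count is taken from the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    """One dataset: a name and its record (document) count."""
    name: str
    count: int


def match_by_count(published, logged, tolerance=0.0):
    """Pair each published entry with logged entries of similar size.

    `tolerance` is a fraction of the published count. 0.0 means exact
    matches only; a small value allows for datasets that were still
    being imported when one side recorded its count.
    """
    matches = {}
    for pub in published:
        allowed = pub.count * tolerance
        hits = [log for log in logged if abs(log.count - pub.count) <= allowed]
        if hits:
            matches[pub.name] = hits
    return matches


# Published names and counts. The second row is a made-up placeholder.
published = [
    Entry("socialprofiles", 75_582_294),
    Entry("example-published", 1_000_000),
]

# Entries from a monitoring log. Only the first row reflects this post;
# the other two are made-up placeholders.
logged = [
    Entry("socialprofilesv2", 75_582_294),
    Entry("example-logged", 1_020_000),
    Entry("unrelated", 5),
]

exact = match_by_count(published, logged)
loose = match_by_count(published, logged, tolerance=0.05)

for name, hits in loose.items():
    print(name, "->", [hit.name for hit in hits])
```

An exact match on a large count is strong evidence on its own. A loose match is only a lead, and still needs a look at the index name and a sample of the data before it can be called the same dataset.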

Any dates and timelines of exposure are based solely on what we verified ourselves with our logs; it’s likely some of them were exposed for longer, as we don’t monitor Elasticsearch closely.

False Claim #1 - A record-breaking breach

As noted above, the issues with the article start with the title itself. In every iteration of the title, as Cybernews kept updating it, the same phrase always appeared: “record-breaking data breach”.

That’s their first false claim. It’s false because there was no record-breaking 16-billion-record data breach.

Throwing 30 different and unrelated datasets or dumps into one article doesn't make the report about one huge data breach. It makes it about 30 datasets that may or may not have some common sources or features.

Moreover, the current title of the article reads “16 billion passwords exposed in record-breaking data breach: what does it mean for you?”.

What we think it means is that they didn't bother to check the accuracy of their analyses and reporting. This leads us into the second false claim.

False Claim #2 - 16b credentials, then passwords leaked

Bob Diachenko, the researcher who claims everything in the Cybernews article went through him, has stated on LinkedIn that the article was “not about numbers but the scale”. Cybernews must have missed his note about that, because the current Cybernews article mentions “16 billion” a total of 18 times, as if it is about the numbers.

And 16 billion what? In its first version, the Cybernews article talked about 16 billion records, all with credentials. When they were challenged about that statement, they later revised it to passwords instead. That, too, was blatantly incorrect.

Inspection of samples of the available datasets in our logs reveals that, despite Cybernews claiming that all records had credentials, and then revising that to claim all records had passwords, multiple leaks did not contain login credentials or passwords at all.

NPD-Data1 & NPD-Data2

Two entries reported by Cybernews were “NPD-Data1” and “NPD-Data2”. They contained a total of 743,619,650 records from the National Public Data breach that has been dumped and leaked multiple times after NPD refused to pay the extortion demands by the hackers in 2024.

The actual name of the index for “NPD-Data1” was “npd”, and the count matches exactly what they mention. “NPD-Data2” might be related to another index exposed on the same server called “npd_records”, but the record count does not match the number that we flagged. This data was wiped and re-imported at some point.

Neither the original NPD leak nor the leak listed in the article as NPD-Data1 & NPD-Data2 includes any login credentials.

socialprofiles

One of the 30 datasets in the article, “socialprofiles”, shows 75,582,294 records exposed; this data was flagged as exposed for 43 days and contained social media data scrapes.

An index in our logs named “socialprofilesv2” matches the record count from the article, and a sample reveals this, too, was a dataset that had no login credentials or passwords. It contained job information, names, and social media profiles, mostly LinkedIn.

people_stable_v3

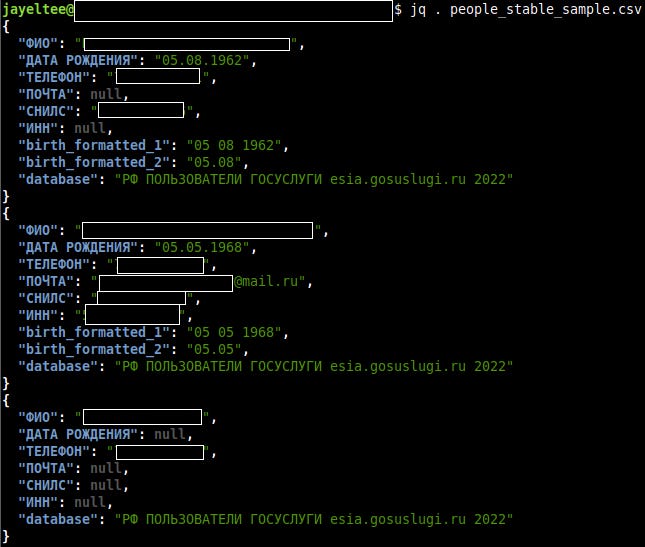

Two entries under people_stable_v3 contained a total of 3,873,145,486 records combined.

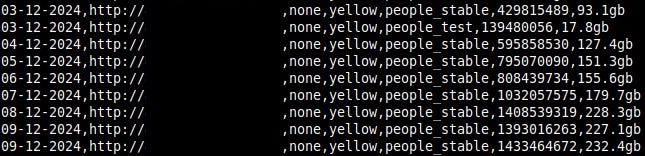

We weren’t able to identify any data in our logs matching those records or with a mention of “v3”, but we identified a server in late 2024 with the index name “people_stable”. It contained billions of records and was eventually wiped out by wiperware.

The data exposed in late 2024 shows that people_stable contained data from multiple Russian websites with multiple dumps, with fields such as full name, date of birth, phone number, email, SNILS, and taxpayer number. We did not see any login credentials or passwords, but we did not examine all of the dumps in the collection.

But even if we exclude the people_stable datasets, it is clear that Cybernews’ claim that ALL records contained login credentials (or passwords) was patently inaccurate.

But we are not done pointing out their false claims.

False Claim #3 - Not recycled data or from old breaches

Cybernews claimed the data their researchers found is not simply old data but instead new and previously unseen leaks: “What’s especially concerning is the structure and recency of these datasets – these aren’t just old breaches being recycled. This is fresh, weaponizable intelligence at scale,” researchers said.

As we noted under False Claim #2, some of the datasets were not new at all. As but one example, the NPD data has been leaked multiple times on different forums. Their claim of “fresh” and “new” is also refuted by the fact that some of the servers that did contain infostealer records also contained clear links to txtbase leaks from Telegram. In other words, much of the data that their article claimed was fresh and weaponizable was itself a repackaging of logs extracted from Telegram channels.

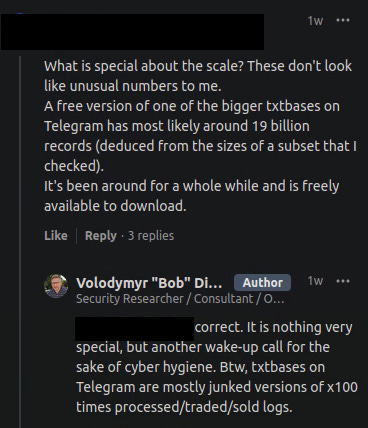

Compilations and txtbase leaks from Telegram aren’t exactly new or even interesting datasets. But don’t take my word for it; check what the researcher responsible for the article said on LinkedIn in reply to a question about what’s so special about the scale of this.

Diachenko states, “Btw, txtbases on Telegram are mostly junked versions of x100 times processed/traded/sold logs.”

So why were they used in this article? Somehow, the article does not mention this at all. Instead, when it mentions Telegram, it’s to claim that cybercriminals are actively shifting off it.

"The increased number of exposed infostealer datasets in the form of centralized, traditional databases, like the ones found be the Cybernews research team, may be a sign, that cybercriminals are actively shifting from previously popular alternatives such as Telegram groups, which were previously the go-to place for obtaining data collected by infostealer malware," Nazarovas said.

The above quote is another example of how little effort was put into researching any of this. Let’s take a look at some of the indices with infostealer logs.

ctionbudget-unamepass

This index name is the default name set by an open-source tool; every time this shows up in our logs, we chuckle a bit.

The tool does not set a password to protect the Elasticsearch cluster by default. A Google search for the index name turns up the public repo for the tool.

Oh no, they shifted off Telegram just to go back to Telegram?!

The data it inserts into Elasticsearch, which then ends up leaked, also proves it comes from txtbase leaks: a field shows the Telegram channel each record came from. You wouldn’t even need to know how to use Google search to validate this.

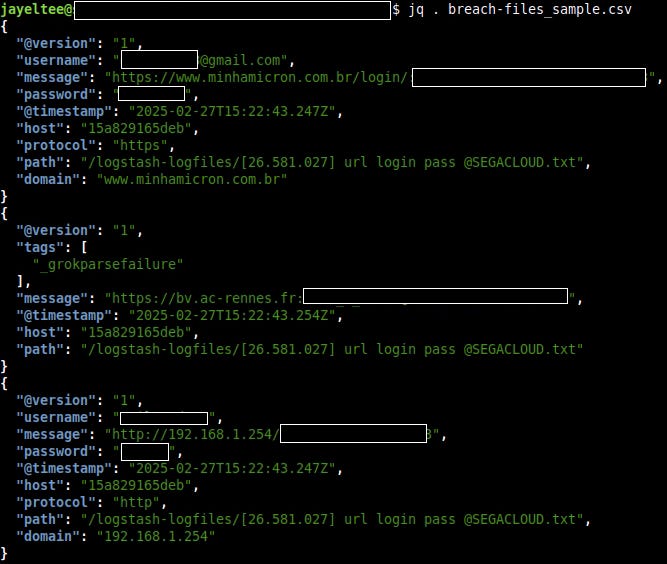

breach-files and stealer_logs

The article lists two entries with the names breach-files and stealer_logs, totaling over 1.2 billion records.

Both servers also showed direct connections to txtbases and repackaged data.

niru / tuga

“The largest one, probably linked to Portuguese-speaking users, contained more than 3.5 billion.”

I’ve mentioned this server being wiped in a post I did about infostealer logs back in February. Back then, I checked some of the data exposed on this server, so I was surprised to read the quote above instead of a factual description of what was exposed.

The reason they used “Portuguese-speaking users” is likely because one of the indices, with over 2.1 billion records, was named “tuga”, a slang term that is used to refer to people from Portugal, not Portuguese speakers. If they had looked at the “country” field that is present in the data, they would have seen that the entries were showing “br”, for Brazil.

But even that might have been inaccurate. Back then, I saw emails for .in, .id, and other countries in both indices, which does not point to “Portuguese-speaking users”, a label that would in any case cover the several countries where Portuguese is an official language.
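The check described above is easy to run on a sample. The sketch below is only an illustration: it assumes each record is a dictionary with a `country` field (mentioned above) and an `email` field (the field name is my assumption), and the sample records are made up.

```python
from collections import Counter


def tally(records):
    """Count values of the `country` field and the top-level domain of emails."""
    countries = Counter()
    tlds = Counter()
    for rec in records:
        country = (rec.get("country") or "").strip().lower()
        if country:
            countries[country] += 1

        email = rec.get("email") or ""
        if "@" in email:
            domain = email.rsplit("@", 1)[1].strip().lower()
            if "." in domain:
                # Keep only the last label, e.g. "example.com.br" -> ".br"
                tlds["." + domain.rsplit(".", 1)[1]] += 1
    return countries, tlds


# Made-up sample records, for illustration only.
sample = [
    {"country": "br", "email": "user1@example.in"},
    {"country": "BR", "email": "user2@example.id"},
    {"country": "br", "email": "user3@example.com.br"},
    {"country": None, "email": "not-an-email"},
]

countries, tlds = tally(sample)
print(countries.most_common())
print(tlds.most_common())
```

Neither tally proves where the victims are, but when the two disagree, as they did here, that is a good sign that a label like “Portuguese-speaking users” needs more than an index name to back it up.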

Cybernews also seems to contradict its own reporting of the datasets being fresh by adding a quote to the article saying that recycled old leaks were present.

“Researchers say most of the leaked data comes from a mix of infostealer malware, credential stuffing sets, and recycled old leaks.” This also leads us to the next false claim.

False Claim #4 - All information comes from Infostealers

Besides the quote above, one of the “Key takeaways” in the article is “The data most likely comes from various infostealers”.

This is later reinforced in the article with statements such as “There’s another interesting aspect to this topic. It is a fact that all information comes from infostealers, an incredibly prevalent threat.”

Cybernews is entitled to its own opinions. They are not entitled to their own facts. Their screenshot with index names disproves their so-called fact with indices like NPD-Data, people_stable, and socialprofiles.

False Claim #5 - All of this was briefly exposed

As Cybernews claimed in its first report:

“The only silver lining here is that all of the datasets were exposed only briefly: long enough for researchers to uncover them, but not long enough to find who was controlling vast amounts of data. Most of the datasets were temporarily accessible through unsecured Elasticsearch or object storage instances.”

According to our logs, some datasets cited by Cybernews were indeed exposed only briefly after they were first detected, mostly because they got wiped soon after being exposed. Other datasets, however, were exposed for months.

The longest exposure we verified was over 5 months; that exposure was for the same server used by a co-worker of Diachenko to write an article about 184 million infostealer logs in late May.

Claiming “all of the datasets were exposed only briefly” is seriously misleading, at best.

Other concerns

We found other concerns, some related to the original article and others related to different articles.

How much of this was reported to the hosts?

The article does not mention any attempts to close any of the servers they found. Did they even report each of these exposed datasets to the hosts? For how many of the datasets did they make any effort to get the data secured or removed?

Because the server running the index “stealer_logs” was still exposed when their article came out, my initial search for the listed counts returned a match for this server, and I noticed the log entry was from earlier that day. I sent an email to the abuse contacts for the IP address not long after the article was published, and the access was blocked within a few hours.

“logins” was also exposed but had been wiped and left with only a ransom note the day before the article was published. Eventually, around June 21st, the owner noticed the data was gone and shut it down.

Given the interest in their article, numerous people wanted to know where to find the leaks and how to get them. If Cybernews reported on data that was discoverable and unsecured, have they put people at risk of fraud or other harms?

Irresponsible reporting

Publishing about things they don’t bother closing is nothing new for Cybernews either. Both DataBreaches.net and I have sent them comments in the past about their failure to report whether they had even tried to get still-unsecured data locked down.

Inflating numbers in other articles

In an article about a leak involving 1.1m files exposed by beWanted that was found by the Cybernews team, they mention, “The team believes that a data leak involving over a million files, with each one likely representing a single person, represents a critical security incident for beWanted.”

I had the file listing for this server, which was exposed at least since July 2023, according to my logs, so I ran a simple command to list the files per directory.

A simple directory listing and count, and I could see that there were over 560,000 files in cv-files and over 500,000 in profile-images.
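For anyone who wants to repeat this kind of check, here is a rough Python equivalent of that count. It is not the command I ran. It assumes the file listing is a list of paths, one per file, and the sample paths are made up; only the directory names `cv-files` and `profile-images` come from the actual listing.

```python
from collections import Counter
from pathlib import PurePosixPath


def files_per_directory(paths):
    """Count files under each top-level directory of a file listing."""
    counts = Counter()
    for raw in paths:
        raw = raw.strip()
        # Skip blank lines and entries that are directories themselves.
        if not raw or raw.endswith("/"):
            continue
        parts = PurePosixPath(raw.lstrip("/")).parts
        top = parts[0] if len(parts) > 1 else "(root)"
        counts[top] += 1
    return counts


# Made-up sample listing, for illustration only.
listing = [
    "cv-files/a.pdf",
    "cv-files/b.pdf",
    "profile-images/a.jpg",
    "profile-images/",
    "readme.txt",
    "",
]

counts = files_per_directory(listing)
for directory, total in counts.most_common():
    print(f"{total:>10,}  {directory}")
```

On a real listing, the same tally makes it obvious at a glance when a headline file count is split across directories that hold very different kinds of files.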

What kind of research was done here if the team couldn’t identify that roughly half the files were profile pictures, and that the total was therefore unlikely to be one file per person?

Final comments and some food for thought

Cybernews added an FAQ to their article in one of their updates, addressing some of the concerns others raised with their initial reporting. The FAQ also addresses some of the issues raised in this post, but simply adding an FAQ to the article without correcting any wrong or misleading information within it has little to no meaning.

Diachenko says he is not sure why the article about the 184m leak, written by the other co-founder of his company, gained so much attention, given that, according to him, the data was only exposed for a couple of weeks.

I’m not either, but I’ll leave you with some food for thought: take a look at the order in which servers were added to the sheet by Diachenko, compare it with my dates, and then look at the dates around the entry related to the 184m leak (#15), which was eventually closed in late May.

Maybe more attention should be given to that article now that both the Cybernews article and my post have been published.

One last reminder to everyone: it’s not about the numbers but the scale. Now, say 16 billion and record-breaking one more time with me.

If you’re interested in incidents I dealt with, you can check all my public finds indexed by country on the post below: